Testing ads in Google Ads is a great way to find out whether a tactic you want to try will improve your results or hurt your campaigns, without actually harming your campaigns and account.

However, there are several mistakes you will want to avoid when A/B testing in Google Ads. Rookie or expert, any advertiser can find themselves making them.

Are you making any of these A/B testing mistakes in Google Ads?

Outside factors might distort your view of the split test outcome.

When starting out, you naturally want to put your A/B testing on the right track. But if you decide to test something and launch it that very day or shortly after, you could fall into the trap of a false start and muddled, unreliable results.

To avoid hidden threats and misleading outcomes, watch out for any seasonality your business may be operating in.

Think of Black Friday, Cyber Monday, or Valentine's Day. You wouldn't want to run a split test over these periods, because unusual shifts in consumer behavior, not just the one element you changed in your ad, might be driving the results you see.

If you then roll those supposedly better changes into your original campaign on the false assumption that they work, you may find you've opened a can of worms. It is one of the many A/B testing mistakes.

Besides shopping events marked by big discounts and increased consumer spending, pay attention to any world or political events that can drive your customers' behavior. During these times, temporary factors might change how your potential customers search and how they interact with ads.
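One way to act on this is to check a planned test window against known shopping dates before you launch. The sketch below is a hypothetical helper, not a Google Ads feature: it uses a hand-picked event list (US Black Friday, which falls the day after Thanksgiving on the fourth Thursday of November, Cyber Monday three days later, and Valentine's Day) and an assumed buffer of 3 days on either side of each event. Extend the list with the events that matter in your market.

```python
from datetime import date, timedelta


def black_friday(year: int) -> date:
    """Day after US Thanksgiving, which is the fourth Thursday of November."""
    nov1 = date(year, 11, 1)
    # weekday(): Monday=0 ... Thursday=3
    first_thursday = nov1 + timedelta(days=(3 - nov1.weekday()) % 7)
    thanksgiving = first_thursday + timedelta(weeks=3)
    return thanksgiving + timedelta(days=1)


def seasonal_dates(year: int) -> dict[str, date]:
    """Hypothetical, incomplete event list; add your own market's peaks."""
    bf = black_friday(year)
    return {
        "Valentine's Day": date(year, 2, 14),
        "Black Friday": bf,
        "Cyber Monday": bf + timedelta(days=3),
    }


def overlapping_events(start: date, end: date, buffer_days: int = 3) -> list[str]:
    """Events whose date, padded by buffer_days on each side, overlaps [start, end]."""
    hits = []
    for year in range(start.year, end.year + 1):
        for name, day in seasonal_dates(year).items():
            window_start = day - timedelta(days=buffer_days)
            window_end = day + timedelta(days=buffer_days)
            if window_start <= end and window_end >= start:
                hits.append(f"{name} {year}")
    return hits


# A test planned for late November 2024 collides with two shopping events.
print(overlapping_events(date(2024, 11, 20), date(2024, 12, 10)))
# ['Black Friday 2024', 'Cyber Monday 2024']
```

An empty list doesn't guarantee a clean window; it only means none of the listed dates fall inside it. World or political events still need a human judgment call.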

Calling an ad winner too early.

Be careful not to declare a winning ad prematurely.

At times, Google can interfere a little too much, as you may have experienced in your digital marketing career. Whether you like it or not, and you probably don't, you should be wary of Google's signals, because they can paint a misleading picture.

Google can favor one ad too early and skew your data. If you leave the ad rotation setting untouched, your experiment stays on the default, which optimizes automatically based on performance (at least in most accounts).

So if you see Google serving one ad far more often, and far too soon, check that your ad rotation is set to "Do not optimize: Rotate ads indefinitely" rather than the default "Optimize" option.
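To put a number on "way more," you can run a simple sample ratio check on the impression counts: if the two ads were truly rotated 50/50, how surprising is the split you see? The sketch below uses the normal approximation to the binomial, where z = (a − b) / √(a + b). The function names and the strict 0.001 threshold are assumptions for illustration; a flagged result is a prompt to review your rotation settings, not proof of what caused the imbalance.

```python
import math


def impression_split_p_value(impressions_a: int, impressions_b: int) -> float:
    """Two-sided p-value for the observed split under an even 50/50 rotation.

    Normal approximation to the binomial: under a 50/50 split, a - b has
    mean 0 and variance a + b, so z = (a - b) / sqrt(a + b).
    """
    n = impressions_a + impressions_b
    if n == 0:
        raise ValueError("no impressions recorded")
    z = (impressions_a - impressions_b) / math.sqrt(n)
    # erfc(|z| / sqrt(2)) equals 2 * (1 - Phi(|z|)), the two-sided p-value.
    return math.erfc(abs(z) / math.sqrt(2))


def rotation_looks_skewed(impressions_a: int, impressions_b: int,
                          alpha: float = 0.001) -> bool:
    """Flag splits that would be very unlikely under an even rotation (alpha is an assumption)."""
    return impression_split_p_value(impressions_a, impressions_b) < alpha


print(rotation_looks_skewed(5200, 4800))  # True: check the ad rotation setting
print(rotation_looks_skewed(5050, 4950))  # False: within normal noise
```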

You have a small budget and want to test one ad at a time.

On a small budget, you might consider running one ad on one day, the second ad the next day, and so on. The reasoning goes like this: with a budget of $40/day, running both ads together means spending only $20 on each. Depending on your niche and average CPC, you might worry that with half of what you used to spend per ad, you can't keep results at the same level.

Even so, it makes more sense to run both versions of the split test at the same time. Why? Because alternating ads day by day brings other variables into play, such as day-of-week differences in search behavior, which might bias the A/B experiment.

Consider running both ads at the same time, even if you are operating on a low budget.

In other words, spread the daily budget over both ads, original and modified, no matter how low or high it may be.
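The arithmetic behind an even split is easy to sketch. Note that over an even number of days, alternating gives each ad exactly the same total spend as running them in parallel ($40 every other day versus $20 every day), so alternating buys no extra budget per ad; it only adds bias. The helper below estimates how long a parallel test needs to collect a given number of clicks per ad. The function names, the $1.25 average CPC, and the 200-click target are hypothetical values for illustration, and the estimate assumes the budget is fully spent each day at a constant CPC.

```python
import math


def budget_per_ad(daily_budget: float, num_ads: int = 2) -> float:
    """Equal share of the daily budget for each ad running at the same time."""
    return daily_budget / num_ads


def days_to_reach_clicks(daily_budget: float, avg_cpc: float,
                         target_clicks_per_ad: int, num_ads: int = 2) -> int:
    """Rough number of days until every ad has collected the target clicks.

    Assumes the full budget is spent each day at a constant average CPC.
    """
    clicks_per_ad_per_day = budget_per_ad(daily_budget, num_ads) / avg_cpc
    return math.ceil(target_clicks_per_ad / clicks_per_ad_per_day)


# $40/day split across two ads, hypothetical $1.25 CPC and 200-click target.
print(budget_per_ad(40))                    # 20.0
print(days_to_reach_clicks(40, 1.25, 200))  # 13
```

A smaller budget doesn't make the test invalid; it makes it take longer, which is the trade-off worth planning for up front.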

You are comparing two radically different ads.

You set up two entirely different ads, and your experiment ad emerges as a clear winner. The ad is shining, the numbers are smiling, and you want to pat yourself on the back, as true PPC geniuses do.

The problem is that although you may have written better copy, you can never be 100% sure which elements led to the shift in consumer behavior and in your numbers. What if you could top that winning ad? You will want to keep testing, because there may be room to beat the current ad, but you won't know where to start or what to change. You'll end up scratching your head instead of patting yourself on the back again.

Bypass the dead ends and take the road of "fair testing."

Instead, test a base ad against a new iteration that changes one element, not against a completely different ad. After seeing which one performed better, repeat the A/B test with a new ad version. Then do it again. And again.

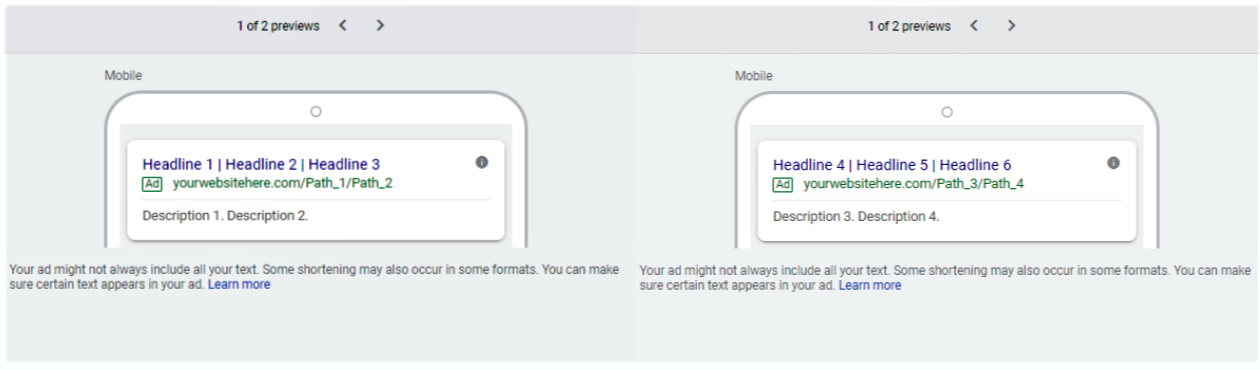
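The champion-versus-challenger loop can be sketched with a guard that rejects unfair tests. The `Ad` model here is hypothetical and deliberately simplified (real responsive search ads carry several headlines and descriptions), and `is_fair_test` and `next_champion` are illustrative helpers, not part of any Google Ads API.

```python
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class Ad:
    # Hypothetical, simplified ad model for illustration only.
    headline: str
    description: str
    path: str


def changed_fields(base: Ad, variant: Ad) -> list[str]:
    """Names of the ad elements that differ between the base ad and the variant."""
    return [f.name for f in fields(Ad) if getattr(base, f.name) != getattr(variant, f.name)]


def is_fair_test(base: Ad, variant: Ad) -> bool:
    """True only when the variant changes exactly one element of the base ad."""
    return len(changed_fields(base, variant)) == 1


def next_champion(champion: Ad, challenger: Ad, challenger_won: bool) -> Ad:
    """The winner of this round becomes the base ad for the next iteration."""
    return challenger if challenger_won else champion


# Round 1: change only the headline.
base = Ad("Fast Shoe Repair", "Same-day service downtown.", "repairs")
challenger = replace(base, headline="Shoe Repair in 24 Hours")
print(is_fair_test(base, challenger))  # True
```

After each round, build the next challenger from whatever `next_champion` returns, again changing a single field, so every result can be traced to one element.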

By testing one variable at a time, you can reveal an element that genuinely impacts your KPIs, and you can be confident about it because you've changed just one component of your ad. Furthermore, if you have followed the 50/50 split rule, roughly equal numbers of users will have seen each ad, which supports your conclusion. Finally, make sure the test runs long enough to reach statistical significance.
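"Statistically significant" can be checked with a standard two-proportion z-test. The sketch below compares CTR (clicks over impressions); to compare conversion rates instead, pass conversions and clicks. The 0.05 threshold is a common convention rather than a rule from this article, and the example counts are made up. Keep in mind that checking the p-value every day and stopping the moment it dips below the threshold inflates false positives, which is exactly how winners get declared too early.

```python
import math


def two_proportion_z_test(successes_a: int, trials_a: int,
                          successes_b: int, trials_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference between two rates, using pooled variance.

    Returns (z, p_value); positive z means variant B has the higher rate.
    """
    if trials_a == 0 or trials_b == 0:
        raise ValueError("both ads need at least one trial")
    rate_a = successes_a / trials_a
    rate_b = successes_b / trials_b
    pooled = (successes_a + successes_b) / (trials_a + trials_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / trials_a + 1 / trials_b))
    if se == 0:
        raise ValueError("no variation in the data; the test is undefined")
    z = (rate_b - rate_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided
    return z, p_value


# Made-up example: ad A at 2.0% CTR vs ad B at 2.6% CTR, 10,000 impressions each.
z, p = two_proportion_z_test(200, 10_000, 260, 10_000)
print(f"z = {z:.2f}, p = {p:.4f}, significant at 0.05: {p < 0.05}")
```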

A/B testing is an important tool for every PPC marketer. Our service is built for Google advertisers who are committed to making their accounts more successful. By automating the calculations and making ad testing seamless, our tool lets advertisers focus on other areas.

Learn how to get started with our A/B test tool.