A/B testing in Google Ads means comparing two ads that differ in one or more elements and determining which one performs better.

It's a simple type of test, easy to understand and implement when you know precisely what you're testing and why.

A/B testing in Google Ads is often used to test a single variable: you run two ads that are identical except for that one element.

Another form of A/B testing in Google Ads is challenging the original ad with an entirely different one: a different headline, description, display URL, and anything else in the ad that can be modified.

Don't worry about needing the huge amounts of traffic that complex experiments require; a simple A/B test of your ads can be run with far less traffic.

Why Should You A/B Test?

Let's assume you have set up a campaign that delivers excellent results. You are satisfied with the numbers, you're protecting steady, profitable performance, and you see no need to change anything in your ad copy.

Why fix something if you don't see any cracks?

Some marketers are also afraid that changes won't pay off. They worry that experimentation will make things worse and harm the account's performance in the long run.

Both concerns are legitimate, but you can also look at A/B testing in Google Ads from another perspective.

Test the waters, then dive in head-first.

Ad testing lets you try out a change you're considering on a controlled share of traffic before rolling it out across the whole campaign. If you don't like the results, you stop the losing ad and your campaign stays safe. A losing variation can hurt your performance, but only on the share of traffic you allocated to it (which depends on the budget you assign to the split test), not on 100% of it.
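To put rough numbers on that, here is a small Python sketch of the blended CTR when a challenger underperforms. It assumes, for simplicity, that impressions split exactly in proportion to the traffic share you set; the `blended_rate` function and all figures are hypothetical, chosen only for illustration.

```python
def blended_rate(control_rate: float, challenger_rate: float, challenger_share: float) -> float:
    """Traffic-weighted rate across both ads.

    Hypothetical simplification: the challenger receives exactly
    `challenger_share` of the traffic and the control gets the rest.
    """
    if not 0.0 <= challenger_share <= 1.0:
        raise ValueError("challenger_share must be between 0 and 1")
    return (1 - challenger_share) * control_rate + challenger_share * challenger_rate


# Hypothetical example: the control has a 3% CTR, the challenger is 30% worse (2.1%)
# and receives 20% of the traffic.
control_ctr = 0.03
challenger_ctr = 0.021
overall = blended_rate(control_ctr, challenger_ctr, 0.20)
relative_drop = 1 - overall / control_ctr
print(f"Blended CTR: {overall:.2%}, drop vs. control: {relative_drop:.1%}")
```

With these numbers the overall CTR dips by about 6%, not 30%, because only a fifth of the traffic saw the weaker ad.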

Google Ads' built-in split-testing feature, campaign experiments (formerly AdWords Campaign Experiments, or ACE), lets you split-test your ads.

Become a problem solver.

You may not manage to address visitors' pain points the first time around. A/B testing won't guarantee that the third time is the charm, but it lets you gradually move away from confusing copy and eliminate a poor user experience.

Increase your key metrics like CTR and conversion rate.

Everyone wants ads with a higher click-through rate (CTR). A higher CTR means more visitors from the same number of impressions, and more chances to convert.
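CTR is clicks divided by impressions, but a click only pays off if it converts, so it can help to look at CTR and conversion rate together. The Python sketch below multiplies them to get conversions per 1,000 impressions. It assumes conversion rate is measured per click (Google Ads reports it per interaction, which for Search text ads is typically a click), and the `AdStats` class and ad figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AdStats:
    impressions: int
    clicks: int
    conversions: int

    @property
    def ctr(self) -> float:
        # Click-through rate: share of impressions that led to a click.
        return self.clicks / self.impressions if self.impressions else 0.0

    @property
    def conversion_rate(self) -> float:
        # Assumption: conversions are counted per click, not per interaction.
        return self.conversions / self.clicks if self.clicks else 0.0

    def conversions_per_1000_impressions(self) -> float:
        # CTR * conversion rate = conversions per impression.
        return 1000 * self.ctr * self.conversion_rate


# Hypothetical data: ad A wins on CTR, ad B wins on conversion rate.
ad_a = AdStats(impressions=10_000, clicks=400, conversions=8)    # CTR 4%, CR 2%
ad_b = AdStats(impressions=10_000, clicks=300, conversions=12)   # CTR 3%, CR 4%
for name, ad in (("A", ad_a), ("B", ad_b)):
    print(name, f"CTR={ad.ctr:.1%}", f"CR={ad.conversion_rate:.1%}",
          f"conv/1k imp={ad.conversions_per_1000_impressions():.2f}")
```

Here ad A has the higher CTR, but ad B produces more conversions from the same impressions, which is why judging a test on CTR alone can pick the wrong winner.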

By continuously testing your ads and keeping the winners, you improve ad performance incrementally. Ultimately, better ads improve your account as a whole.

Your ad and landing page should resonate with each other.

The ad copy and the landing page content should be consistent; users should feel they have come to the right place to achieve the goal they have in mind. They need to recognize instantly the link between what the ad they clicked on told them and what the destination page says. If you believe your landing page is already top-notch, focus your testing on the ads.

There's one crucial thing to remember when you manage PPC advertising: your ads, and especially your headlines, aren't supposed to sell the product or service. The ad's job in the sales process is to sell the click. The rest is up to your landing page or website.

What to Test?

Start simple, and start with the main thing: your ad copy.

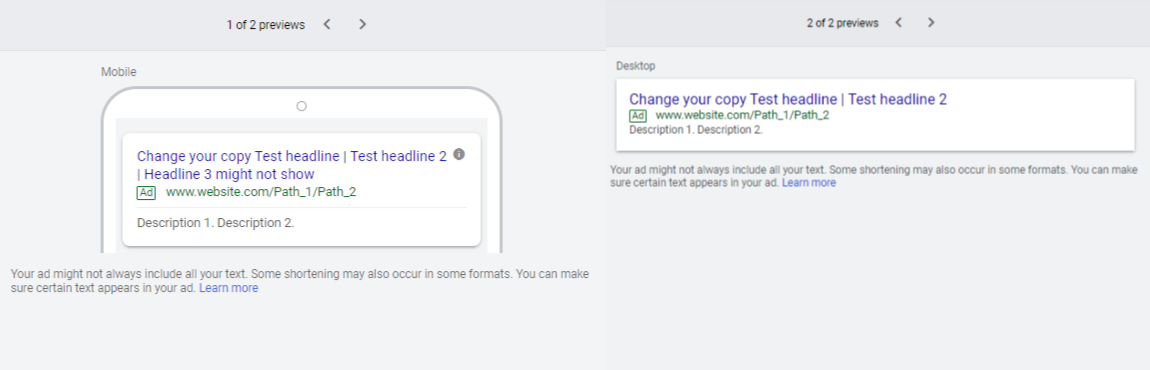

Work from the top down. Experiment with headlines first, since they're what users notice initially, then move on to descriptions.

Other variables worth split-testing include keywords and campaign settings such as targeting options or the bidding strategy used for that campaign.

One of the most useful bidding experiments you can run is Smart Bidding against manual CPC bidding.

How to Split Traffic, and for How Long

One of the settings you'll need to define is how much traffic goes to your control and how much to your experiment (the variation, or challenger).

You have several options. For example, you can send 80% of traffic to your proven control ad and 20% to the variation, which lets you decide what share of your budget goes to the experiment. The trade-off is that if you give your challenger much less traffic, it will take longer to reach statistical significance.

A 50/50 split is good practice because, for the same total traffic, it gets you statistically reliable data faster.
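To see why the split matters, the Python sketch below estimates how many impressions, and therefore days, a CTR test needs before it can reliably detect a given difference. It uses the standard normal-approximation sample-size formula for comparing two proportions with unequal allocation. The function names, the 5% significance level, 80% power, baseline and target CTRs, and daily impression count are all hypothetical inputs, not Google Ads defaults.

```python
import math
from statistics import NormalDist


def required_impressions(p_control: float, p_challenger: float, control_share: float,
                         alpha: float = 0.05, power: float = 0.80) -> int:
    """Total impressions needed to detect p_control vs p_challenger (two-sided test).

    Normal approximation for two proportions with unequal allocation:
    N = (z_{1-alpha/2} + z_{power})^2 * (p1(1-p1)/s + p2(1-p2)/(1-s)) / (p2 - p1)^2
    where s is the share of traffic sent to the control.
    """
    if not 0.0 < control_share < 1.0:
        raise ValueError("control_share must be strictly between 0 and 1")
    if p_control == p_challenger:
        raise ValueError("the two rates must differ")
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    variance_term = (p_control * (1 - p_control) / control_share
                     + p_challenger * (1 - p_challenger) / (1 - control_share))
    n = (z_alpha + z_beta) ** 2 * variance_term / (p_challenger - p_control) ** 2
    return math.ceil(n)


def days_needed(total_impressions: int, daily_impressions: int) -> int:
    # Assumes traffic volume stays roughly constant from day to day.
    return math.ceil(total_impressions / daily_impressions)


# Hypothetical campaign: 2.0% baseline CTR, hoping to detect 2.5%, 2,000 impressions/day.
for share in (0.5, 0.8):
    n = required_impressions(0.020, 0.025, control_share=share)
    print(f"{share:.0%}/{1 - share:.0%} split: ~{n:,} impressions, ~{days_needed(n, 2000)} days")
```

With these inputs the 80/20 split needs roughly 1.66 times as many impressions as the 50/50 split to detect the same difference, because the smaller arm's data is noisier.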

You can set an end date for your experiment manually, but the test should run long enough to reach statistical significance. Aim for a p-value of 0.05 (5%) or lower, which corresponds to a 95% confidence level.
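One common way to get that p-value for a CTR test is a two-proportion z-test. The Python sketch below is a minimal version using only the standard library; the `two_proportion_z_test` function and the click and impression counts are hypothetical, and this is not necessarily the calculation Google Ads or any particular tool uses internally.

```python
import math


def two_proportion_z_test(clicks_a: int, impressions_a: int,
                          clicks_b: int, impressions_b: int) -> tuple[float, float]:
    """Two-sided two-proportion z-test on CTR. Returns (z, p_value)."""
    ctr_a = clicks_a / impressions_a
    ctr_b = clicks_b / impressions_b
    # Pooled CTR under the null hypothesis that both ads perform the same.
    pooled = (clicks_a + clicks_b) / (impressions_a + impressions_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / impressions_a + 1 / impressions_b))
    if se == 0:
        raise ValueError("no variation in the data (pooled CTR is 0 or 1)")
    z = (ctr_b - ctr_a) / se
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results after a 50/50 split.
z, p = two_proportion_z_test(clicks_a=200, impressions_a=10_000,
                             clicks_b=260, impressions_b=10_000)
verdict = "significant" if p <= 0.05 else "not significant yet"
print(f"z = {z:.2f}, p = {p:.4f} -> {verdict} at the 5% level")
```

Here ad B's higher CTR comes out significant, with p well below 0.05; with 210 clicks instead of 260 it would not be.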

If you don't know where to start or simply can't take the time for ad testing, we have a solution for you.

With our tool, you can significantly improve your CTR and conversion rate (CR) in just a few minutes each week; we have seen improvements of up to 30%.

Our ad testing service compares ad performance using your conversion and click-through rate data and identifies the winning ad. It then prompts you to keep testing so you can improve your ad copy further.

To learn more about the steps we take when testing Google Ads, check out our Features section.